Attacking face recognition authentication – how easy is it to fool?

This article shows how we managed to bypass face recognition used as a biometric solution by, among others, the financial industry, and then suggests ways to make it more secure.

In recent weeks we had a lot of fun working on various facial biometrics solutions. They were implemented in many different ways, so our approach was not standard either.

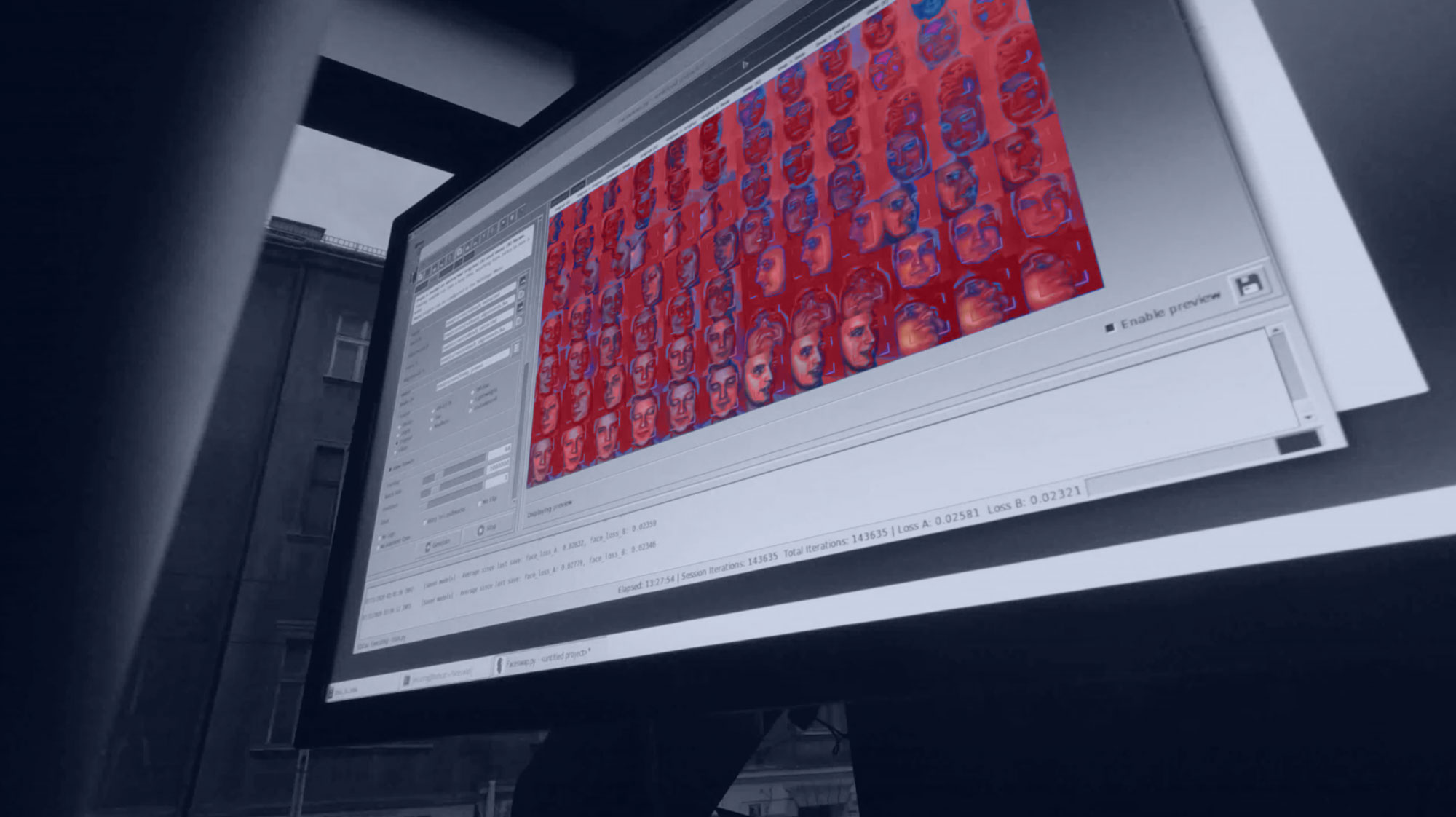

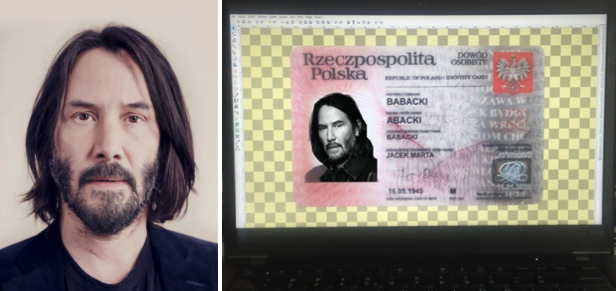

Here is a little preview:

We will go through the following points to get a broader perspective on the whole case:

- How does biometry exactly work?

- Threat model – who attacks these systems, and why?

- Attack vectors – biometric and classic

- Methods of attacking biometric vulnerabilities

- Vulnerabilities we have found

- Securing biometric solutions

- Consequences

- Summary

Intro:

At the beginning of 2020, widespread social isolation created new needs across the economy, and with them an increased interest in facial recognition solutions. Camera-based biometrics allow people to be recognized, compared and verified. They are widely used by airports, government facilities and social media, and are now also being deployed in mobile banking.

The wide availability of face biometrics technology makes it more popular among service providers, who are beginning to use it for authentication. So far, these solutions ran in controlled environments that minimize the risk of abuse. Now, however, they have to run directly on end-user devices. Confirming identity needs to be extremely simple so that every user can handle it.

This approach will surely drive costs down and limit the required interactions (e.g. visiting a bank branch or meeting a courier who verifies our identity). It is also possible to use biometrics later as one of the authentication factors for a registered user, e.g. to change key account settings.

How does biometry exactly work?

The most popular way to verify facial biometrics is to generate a signature describing the geometry of the face and its main features, including the eyes, nose and lips and their distribution on the face. The similarity of two faces can be measured by comparing their signatures, which are created using mathematical formulas designed to be insensitive to the user's aging or to changes in beard, hairstyle or body weight.

Authentication mechanisms use a signature comparison algorithm to decide, based on the degree of similarity, whether we really look like the person we claim to be. Due to the complexity of the problem, it is not possible to give a clear yes/no answer. Instead, the system decides whether the degree of facial similarity is acceptable, taking into account possible differences in appearance. The key to the effectiveness of the system is to set the threshold at the point that minimizes the number of misclassified cases (false positives and false negatives). There is no known perfect algorithm that can say with certainty whether the faces in two photos belong to the same person.

There are many cases where publicly available biometrics-based systems have failed, e.g. the voice authentication introduced by HSBC, which was deceived when a BBC reporter's brother imitated the reporter's voice to get authorized by the system.
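To make the trade-off behind the threshold concrete, here is a minimal sketch. It assumes that signatures are plain numeric vectors compared with cosine similarity. That is only one common choice and not necessarily what any of the tested products do. The scores are made up for illustration and all function names are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length signature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def is_match(sig_a, sig_b, threshold):
    """Accept the pair if the similarity reaches the chosen threshold."""
    return cosine_similarity(sig_a, sig_b) >= threshold

def error_rates(genuine_scores, impostor_scores, threshold):
    """Return (false_accept_rate, false_reject_rate) at a given threshold."""
    false_accepts = sum(s >= threshold for s in impostor_scores)
    false_rejects = sum(s < threshold for s in genuine_scores)
    return (false_accepts / len(impostor_scores),
            false_rejects / len(genuine_scores))

# Made-up similarity scores, purely for illustration.
genuine = [0.91, 0.88, 0.79, 0.95, 0.83]   # same person, different photos
impostor = [0.42, 0.61, 0.81, 0.35, 0.58]  # different people

for threshold in (0.6, 0.8, 0.9):
    far, frr = error_rates(genuine, impostor, threshold)
    print(f"threshold={threshold:.2f}  FAR={far:.2f}  FRR={frr:.2f}")
```

With these sample scores, lowering the threshold lets more impostors through, while raising it rejects more legitimate users. No threshold in this toy data set brings both error rates to zero at the same time.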

Threat model – who attacks these systems, and why?

The main goal of attackers targeting biometric systems is to impersonate another or a non-existent person in order to gain authenticated access to resources without revealing their true identity. The most common case of identity theft is taking out a short-term loan from a small company whose verification process is short and requires only the presentation of a valid ID card. Other public and financial institutions are also heavily exposed to attacks because of the potential reputational and financial losses.

The use of biometric solutions is growing rapidly. Their task is to facilitate and automate the entire process of creating and authorizing accounts. Up until now, every user setting up a bank account had to go to a bank branch or wait for a courier who would bring the relevant documents and verify the user's identity on the basis of a valid identity document.

Biometric verification allows you to open an account at home, mainly using a phone on which the application has been installed. Along with these new solutions come new risks of attacks on such systems.

Attack vectors – biometric and classic

During the penetration testing of biometric solutions, we divided the possible attack vectors into three categories:

- Biometric vulnerabilities – those that occur in the algorithm of face detection, signature generation and liveness verification,

- Environmental vulnerabilities – those that result from running the algorithm on the user’s end device,

- API vulnerabilities – those that result from the modification of communication between the user and the identity verification server.

Methods of attacking biometric vulnerabilities

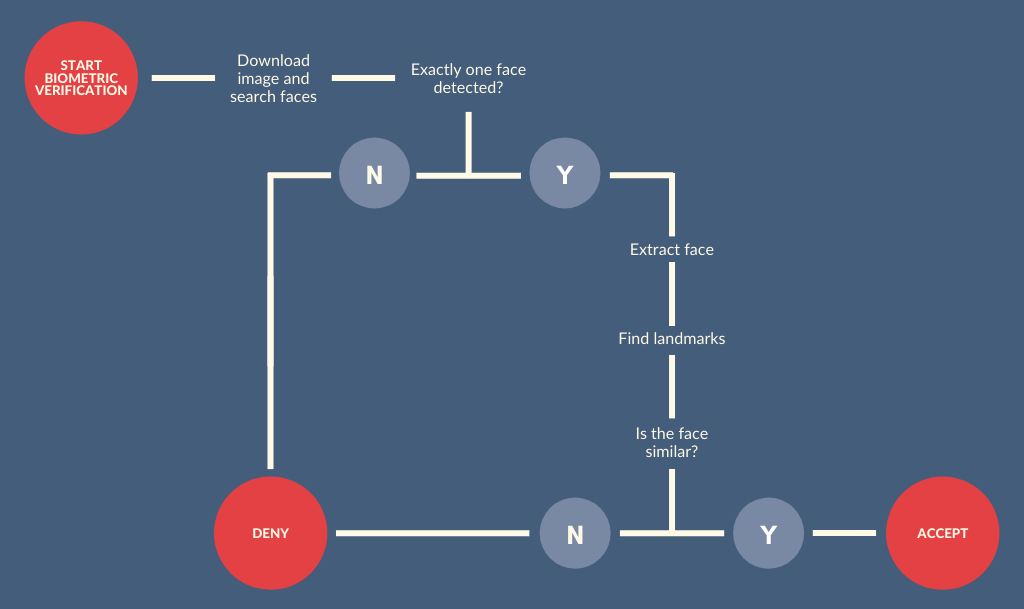

The operation’s method of the face’s recognition we can generalise to the following diagram:

In addition, the system can perform liveness checks which detect if the face is moving, e.g. if a person blinks or ask the user to perform certain gestures and observe whether the face features remain coherent.

Assuming that our goal is to fool the image recognition system designed for this one specific purpose and there is no manual verification performed by humans so we can focus on presenting expected face and providing required changes in the image.

“Analog methods”

Before using more advanced techniques, we started with the most primitive ones. A simple mask printed on paper is enough to present a given face to the system without modifying the application or the device. Bypassing the liveness check sometimes required some additional steps, like rolling one's eyes or bending the printout.

Although it looks ridiculous, even this procedure was sufficient to fool one of the solutions we tested.

“Digital methods”

When the liveness check uses more complex and random gestures, the attacker needs to be able to manipulate the face in the video stream.

We used the open source project faceswap, which allowed us to replace existing faces with digitally generated ones using the deepfake technique. Initial trials showed that the quality and diversity of a person's photos are crucial to successfully training the AI model that swaps the designated faces. For the easiest cases, like a short video of a front-facing person, recording a casual conversation in a café with an iPhone camera will provide enough training material. Feeding too few images covering various face angles into the training phase may result in very poor quality of the generated video.

Another possibility to achieve the same goal is to use software for motion capture and facial animation which is professionally used in the film industry.

A significant technical challenge was delivering the modified video to the phone. On Android it was possible to modify the application to use an external camera (or a device that impersonates one). Things get more complicated on iOS, which required us to resort to less sophisticated tricks.

Common vulnerabilities

So far, we have conducted a series of tests for several major banks that implement biometric verification for remote account creation. In most of the tested cases, biometric services were provided by external suppliers.

One of the most popular biometric verification solutions, used by many applications, performs server-side liveness verification on a recording sent for acceptance. The uploaded video is used to check whether:

- The liveness challenge has been properly done,

- The person performing the challenge is similar to the one on the identity card,

- There is only one and the same person in the whole recording.

One of the test cases was to manipulate the recording so that it would pass the facial similarity verification even though the challenge was performed by a different person. We managed to do this by combining video of the person to impersonate with video of an attacker performing the liveness challenge.

We were very surprised by the result: the system returned a very high similarity score and a properly performed challenge, and did not report any possible manipulation of the recording.

After several attempts, we came to the conclusion that the facial similarity test is performed on the whole recording and returns the highest detected similarity score.

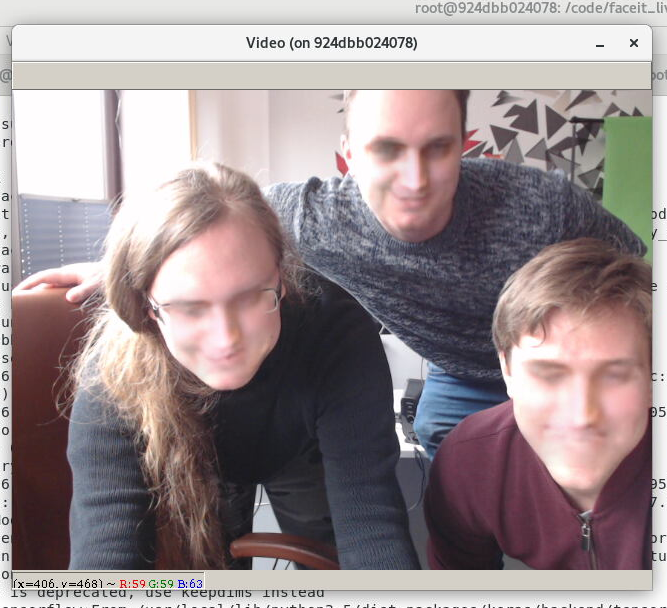
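The effect of taking the highest score over the whole recording can be sketched as follows. This is only an illustration of the behaviour we inferred from the outside, not the vendor's code. The per-frame scores, the threshold and both function names are hypothetical.

```python
def verify_max(frame_scores, threshold):
    """Flawed aggregation: accept if ANY frame resembles the ID photo."""
    return max(frame_scores) >= threshold

def verify_consistent(frame_scores, threshold):
    """Stricter aggregation: EVERY frame with a face must resemble the ID photo."""
    return min(frame_scores) >= threshold

THRESHOLD = 0.8  # assumed acceptance threshold, for illustration only

# Hypothetical per-frame similarity to the ID card photo.
# A few frames of the victim followed by the attacker doing the challenge:
spliced = [0.93, 0.94, 0.92, 0.31, 0.28, 0.30, 0.29]
# The legitimate user throughout the recording:
genuine = [0.90, 0.88, 0.93, 0.91, 0.89, 0.92, 0.90]

print("max rule, spliced:       ", verify_max(spliced, THRESHOLD))
print("consistent rule, spliced:", verify_consistent(spliced, THRESHOLD))
print("consistent rule, genuine:", verify_consistent(genuine, THRESHOLD))
```

A strict minimum is probably too sensitive to blurry or badly lit frames to be used as is. A real implementation would more likely tolerate a small fraction of low-scoring frames and also compare consecutive frames with each other to detect a change of person.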

The above vulnerability was possible because the mechanism that detects whether one and the same person appears throughout the recording was missing or incorrectly implemented. In our experience, this kind of vulnerability occurs quite often. If exploited, it could allow an attacker to bypass identity verification and pass the process with a fake identity card and just a few frames of the victim. In case this was mitigated quickly, one of our other ideas was to submit a video containing a computer-generated face. For this purpose, we used the open source project faceswap, which allowed us to digitally swap the face in the recording.

As a result, we generated recordings that contained only one person the whole time, with a digitally swapped face, successfully performing all the required challenges.

During tests of another solution, it turned out that the liveness check was carried out only on the client-side device. This simplified bypassing the whole process: we could either intercept the communication or inspect the API calls and create a custom client to perform the verification. We achieved our goal using both approaches, which allowed us to send modified pictures of the identity card and the selfie, which were then compared by biometric algorithms on the server side.

We were able to pass verification using the above scenario. After all, why wouldn't it work, since the Keanu in the selfie looks pretty similar to the one on the identity card, right? 🙂

Securing biometric solutions

It is hard to name a single best way to make biometrics secure enough. However, we will try to point out a few areas that are worth focusing on when implementing these systems.

- First and most important: ALWAYS perform all checks server-side. It is better to assume that the user can bypass any client-side check,

- Require the user to perform challenges randomly generated by the server, containing visual and audio tasks, while recording,

- Check that only one person appears during the whole recording and that it is the same person as on the identity card,

- Instead of sending photos of identity cards, record short videos showing the document from many angles, which makes it harder for attackers to manipulate,

- To make it more difficult for rogue users to manipulate data, send it via an encrypted communication protocol and obfuscate the application code. Keep in mind that this step only makes a bypass more time-consuming.
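The recommendation about server-generated challenges could look roughly like the sketch below. It is a minimal illustration under our own assumptions, not a complete protocol. The gesture names, the 60-second lifetime, `SERVER_KEY` and both function names are hypothetical, and `observed_gestures` stands for whatever the server-side video analysis detected in the uploaded recording.

```python
import hashlib
import hmac
import secrets
import time

SERVER_KEY = secrets.token_bytes(32)  # hypothetical secret, never sent to the client
GESTURES = ["turn_left", "turn_right", "blink", "smile", "nod"]  # illustrative set
CHALLENGE_TTL_SECONDS = 60  # assumed lifetime of a challenge

def _sign(session_id, gestures, expires):
    """HMAC tag binding the challenge to one session and one expiry time."""
    payload = f"{session_id}|{','.join(gestures)}|{expires}".encode()
    return hmac.new(SERVER_KEY, payload, hashlib.sha256).hexdigest()

def issue_challenge(session_id, now=None):
    """Pick a random gesture sequence on the server and sign it."""
    now = time.time() if now is None else now
    gestures = secrets.SystemRandom().sample(GESTURES, k=3)
    expires = int(now) + CHALLENGE_TTL_SECONDS
    return {"gestures": gestures, "expires": expires,
            "tag": _sign(session_id, gestures, expires)}

def check_challenge(session_id, challenge, observed_gestures, now=None):
    """Accept only an untampered, unexpired challenge performed in order."""
    now = time.time() if now is None else now
    expected = _sign(session_id, challenge["gestures"], challenge["expires"])
    if not hmac.compare_digest(expected, challenge["tag"]):
        return False  # challenge was modified or belongs to another session
    if now > challenge["expires"]:
        return False  # too old, e.g. a pre-rendered video
    return observed_gestures == challenge["gestures"]

challenge = issue_challenge("session-123")
print(challenge["gestures"])
print(check_challenge("session-123", challenge, challenge["gestures"]))
```

The short lifetime limits the time an attacker has to render a matching fake video. The sketch does not stop the same challenge from being answered twice within that window, so a real service would also need to mark each challenge as used.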

Consequences

We are aware that the human factor can also fail during in-person identity verification. However, if someone uses a remote process, it may turn out that law enforcement will never find this person, because they used the Internet and various tricks to hide their identity.

Exploiting vulnerabilities in the remote authentication methods of financial institutions can have serious consequences, such as theft of money from accounts or taking out loans in other people's names without leaving home. In our opinion, one of the most likely fraud scenarios is buying stolen identity cards from illegal sites on the darknet and attempting to take out loans.

Summary

Biometric systems are quite new, which makes them difficult to implement securely. This often results in errors that make it significantly easier to pass verification. In automated solutions, companies usually use AI to assess authenticity and detect possible modifications of the input. However, the margin of error that comes from misclassification in the verification process can lead to false positives. Biometric always means probabilistic, and it is never 100% bulletproof.

If you are currently working on a mobile application, make sure to keep it safe by using our guide, Mobile Application Security: Best Practices. You will find useful tips there on how to protect yourself and your users.


Special thanks to the co-author of the article: Jakub Kramarz