Before you implement Face Recognition in your app – AI hack study

In this article, we fooled popular Face Recognition APIs such as Azure Cognitive Services, Amazon Rekognition, and Face++. We show the pros and cons of using publicly available solutions and, most importantly, how to use them securely!

Use of Face Recognition in real life

In recent years, the use of facial recognition has grown significantly. Applications use it to recommend targeted content based on detected facial features, which can be used to guess age, gender and ethnicity. It is also possible to determine a user’s emotions, such as anger, disgust, fear, happiness, sadness or surprise.

In February 2021, TikTok agreed to a $92 million settlement of a US lawsuit alleging that the app had used facial recognition to identify users’ age, gender and ethnicity in order to recommend targeted content.

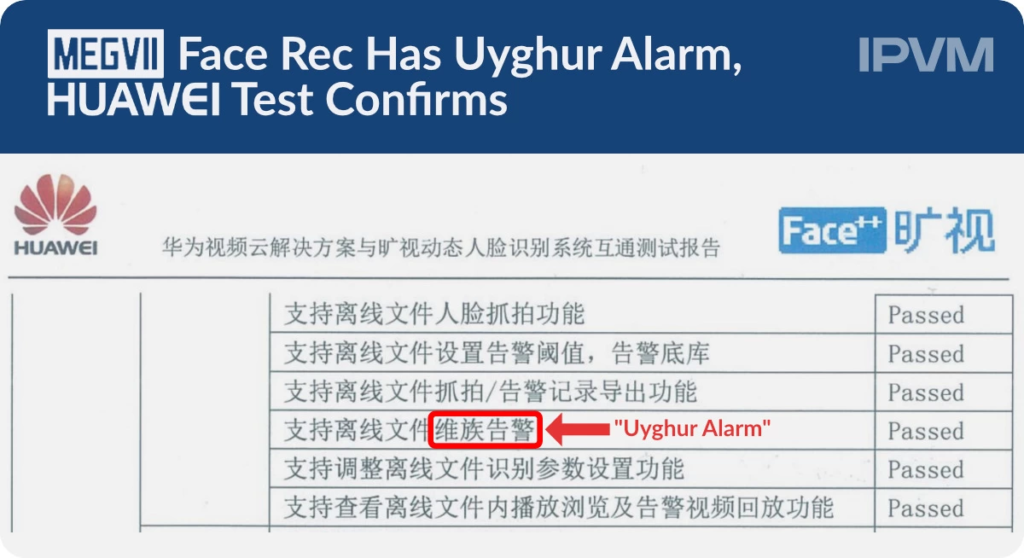

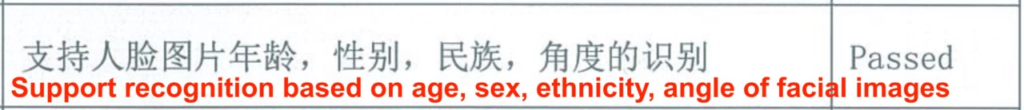

In 2020, Huawei accidentally released a test report summarizing tests of facial recognition software from Megvii (the company behind Face++) on Huawei’s video cloud infrastructure. An IPVM article confirmed that one of the tested features that passed the test was a “Uyghur alarm”, intended to flag members of that ethnic minority.

Another part of the report stated that the software was able to recognize the age, sex and ethnicity of detected people.

External providers of face recognition technology – pros and cons

Using publicly available services reduces the effort of developing your own DNN (deep neural network). External providers often possess large amounts of data, which allows them to train models on many examples and make them more accurate.

On the other hand, we have to hand our data over to the provider, and we never know what it will be used for. For security-focused projects, e.g. building entry control or application authorization using face recognition, this would never be an option because of security requirements.

The pros and cons depend on the project, so the decision has to be made case by case. I have summarized some of them below to help you make the right decision during the planning process.

| Pros | Cons |
|---|---|
| + Many publicly accessible providers | – Provider might store your data |
| + Model trained on a large amount of data | – Publicly accessible APIs can be compromised |
| + Low implementation effort | – Cannot modify the trained model |
| + Scalable cloud-based infrastructure | – High usage costs when processing large amounts of data, e.g. CCTV |

Common threats of implementing facial recognition

For demonstration purposes, let’s assume that we want to develop a mobile banking application that lets users open a new account using only a phone. More and more financial institutions allow you to open an account from home, using only your phone to verify your identity.

The process is user-friendly and consists of a few steps:

- The user shows their ID card,

- The user performs a liveness challenge, i.e. randomly generated actions such as moving their head or saying something, so the bank can make sure that the user is aware of the process,

- The application compares the face in the ID card photo with the face in the liveness challenge video to make sure it’s the same person.

Our task is to create an algorithm that verifies that the person who completed the liveness challenge is the same person shown on the ID card. During the research, I implemented face comparison functions based on the documentation each face recognition service provided at the time. Each function sends two images to the external service, which extracts the faces and compares their similarity.

Using Azure Cognitive Services API

According to the Azure Face documentation, SDKs are available in various languages such as .NET, Python and Go. We will focus on the Python examples in the “Verify faces” section of “Quickstart: Use the Face client library – Azure Cognitive Services”.

Running the example code from the quickstart gives the following result:

1 face(s) detected from image Family1-Dad3.jpg.

1 face(s) detected from image Family1-Son1.jpg.

1 face(s) detected from image Family1-Dad1.jpg.

1 face(s) detected from image Family1-Dad2.jpg.

Faces from Family1-Dad3.jpg & Family1-Dad1.jpg are of the same person, with confidence: 0.87954

Faces from Family1-Son1.jpg & Family1-Dad1.jpg are of a different person, with confidence: 0.26071

What exactly happened here? To call the face comparison method, we have to supply face IDs, which are returned when the faces in the submitted images are detected. Let’s shorten the given example to fit our case: detect the faces in both images, take the first face ID returned for each, and pass the two IDs to the verification method.
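A minimal sketch of that shortened flow, assuming the legacy `azure-cognitiveservices-vision-face` Python SDK used by the quickstart at the time (`face.detect_with_stream` and `face.verify_face_to_face`). The function name `compare_first_faces` is ours, and the client is passed in as a parameter so the logic can be exercised without credentials. It keeps the weakness described next: it always uses the first detected face.

```python
from typing import Any, Tuple


def compare_first_faces(face_client: Any, source_path: str, target_path: str) -> Tuple[bool, float]:
    """Detect faces in two images and verify the FIRST face found in each.

    `face_client` is assumed to be a legacy azure.cognitiveservices.vision.face.FaceClient;
    any object exposing the same two methods works.
    """
    face_ids = []
    for path in (source_path, target_path):
        with open(path, "rb") as image:
            faces = face_client.face.detect_with_stream(
                image,
                detection_model="detection_03",
                recognition_model="recognition_04",
            )
        if not faces:
            raise ValueError(f"No face detected in {path}")
        # Weak spot: faces[0] is simply the largest face, even if several were found.
        face_ids.append(faces[0].face_id)

    result = face_client.face.verify_face_to_face(face_ids[0], face_ids[1])
    return result.is_identical, result.confidence


# Usage with the real client (endpoint, key and file names are placeholders):
#   from azure.cognitiveservices.vision.face import FaceClient
#   from msrest.authentication import CognitiveServicesCredentials
#   client = FaceClient("https://<resource>.cognitiveservices.azure.com/",
#                       CognitiveServicesCredentials("<key>"))
#   print(compare_first_faces(client, "id_card.jpg", "selfie.jpg"))
```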

This works in most cases where the supplied images contain only one face. However, although the example from the documentation does report that more faces were detected, it always takes the first one.

“In the API response, faces are listed in size order from largest to smallest.”

Microsoft Docs

What can go wrong:

- The example from the documentation doesn’t expect more than one face per image, which can lead to inconsistent comparisons – make sure the correct faces are selected for comparison,

- The IsIdentical field is true whenever the confidence is greater than or equal to 0.5 – this threshold should be tuned for your application.
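A hardened version of the selection and decision steps might look like the sketch below. It assumes the same legacy SDK result objects (`face_id` on detected faces, `confidence` on the verification result); the helper names and the 0.7 threshold are hypothetical placeholders that you would tune on your own data.

```python
from typing import Any, Sequence


def only_face_id(faces: Sequence[Any], image_label: str) -> str:
    """Return the face ID only when exactly one face was detected in the image."""
    if len(faces) != 1:
        # Refuse instead of silently taking faces[0] (the largest face).
        raise ValueError(f"{image_label}: expected exactly one face, found {len(faces)}")
    return faces[0].face_id


def is_same_person(verify_result: Any, threshold: float = 0.7) -> bool:
    """Decide on the raw confidence rather than IsIdentical, which flips at 0.5.

    The 0.7 default is an illustrative value, not a recommendation.
    """
    return verify_result.confidence >= threshold
```

In the comparison flow, each face ID would then come from `only_face_id(...)` instead of the first list element, and the final decision from `is_same_person(...)` instead of the IsIdentical flag.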

Using Amazon Rekognition API

Let’s take a look at Amazon’s documentation, “Comparing faces in images – Amazon Rekognition”, and its face comparison example using the boto3 module.

Running the example code from the documentation gives the following result:

The face at 0.3587000370025635 0.30205485224723816 matches with 99.96796417236328% confidence

Face matches: 1

This time we are given simpler, more developer-friendly code. The function accepts the images to be compared directly, as bytes read from the files.
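For reference, a condensed sketch of the same call, assuming a boto3 Rekognition client (`boto3.client("rekognition")`) and its `compare_faces` operation with `SourceImage`/`TargetImage` passed as raw bytes. The client is injected so the function can be tried without AWS credentials, and the function names are ours.

```python
from typing import Any, Dict


def compare_faces(client: Any, source_path: str, target_path: str,
                  similarity_threshold: float = 80.0) -> Dict[str, Any]:
    """Send both images as raw bytes to Rekognition CompareFaces and return the response."""
    with open(source_path, "rb") as source, open(target_path, "rb") as target:
        return client.compare_faces(
            SourceImage={"Bytes": source.read()},
            TargetImage={"Bytes": target.read()},
            SimilarityThreshold=similarity_threshold,
        )


def report_matches(response: Dict[str, Any]) -> int:
    """Print each matched face like the documentation example and return the match count."""
    for match in response["FaceMatches"]:
        box = match["Face"]["BoundingBox"]
        print(f"The face at {box['Left']} {box['Top']} matches with {match['Similarity']}% confidence")
    return len(response["FaceMatches"])


# Usage with real credentials (file names are placeholders):
#   import boto3
#   response = compare_faces(boto3.client("rekognition"), "id_card.jpg", "selfie.jpg")
#   print("Face matches:", report_matches(response))
```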

As a result, developers receive information about each matched face, followed by the number of matches. At first glance we should be safe from the Azure case, where additional faces could be sneaked in, but let’s look at the documentation once again.

“If you provide a source image that contains multiple faces, the service detects the largest face and uses it to compare with each face that’s detected in the target image.”

Amazon Docs

What can go wrong:

- The application has to make sure on its own that the source image contains only one face; otherwise the API will choose the largest one for the comparison,

- The response also contains unmatched faces, while the example above only expects matched ones.
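A stricter sketch, assuming the same boto3 client: it first calls Rekognition’s `detect_faces` on both images and only proceeds when each contains exactly one face, then accepts the result only if there is exactly one match, no unmatched faces and a similarity at or above a threshold you choose. The function names and the 95.0 default are hypothetical.

```python
from typing import Any


def count_faces(client: Any, image_bytes: bytes) -> int:
    """Number of faces Rekognition DetectFaces reports for the image."""
    return len(client.detect_faces(Image={"Bytes": image_bytes})["FaceDetails"])


def verify_single_face_pair(client: Any, source_bytes: bytes, target_bytes: bytes,
                            min_similarity: float = 95.0) -> bool:
    # Refuse images where the API would have to pick "the largest face" for us.
    if count_faces(client, source_bytes) != 1 or count_faces(client, target_bytes) != 1:
        return False
    response = client.compare_faces(
        SourceImage={"Bytes": source_bytes},
        TargetImage={"Bytes": target_bytes},
        SimilarityThreshold=min_similarity,
    )
    matches = response.get("FaceMatches", [])
    unmatched = response.get("UnmatchedFaces", [])
    # Expect exactly one matched face and no unmatched ones.
    return len(matches) == 1 and not unmatched and matches[0]["Similarity"] >= min_similarity
```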

Using Face++ API

The last platform tested was Face++, whose documentation contains a lot of intricacies; example usage of its APIs is available in Java and Python in the Doc Center.

The example code from the documentation shows how to detect faces in images.

It’s been a while since I last saw code that builds multipart requests by hand. The example works, but let’s make it a bit shorter and more readable using the requests library.

Based on that simplified version, let’s create a function that also makes use of the comparison API.
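A minimal sketch of such a function, using the requests library and the Face++ v3 Compare endpoint (`/facepp/v3/compare`) with the `api_key`, `api_secret`, `image_file1` and `image_file2` form fields as we understand them from the Doc Center; check the base URL for your region (`api-us` is assumed here). The session is injectable so the function can be tested offline, and the credentials travel in the POST body rather than in the query string.

```python
from typing import Any, Dict, Optional

# Assumed regional endpoint; verify it against the Face++ Doc Center.
COMPARE_URL = "https://api-us.faceplusplus.com/facepp/v3/compare"


def facepp_compare(api_key: str, api_secret: str, path1: str, path2: str,
                   session: Optional[Any] = None) -> Dict[str, Any]:
    """Upload two images to the Face++ Compare API and return the parsed JSON."""
    if session is None:
        import requests  # third-party: pip install requests
        session = requests.Session()
    with open(path1, "rb") as image1, open(path2, "rb") as image2:
        response = session.post(
            COMPARE_URL,
            # Credentials go in the multipart form body, never in the URL.
            data={"api_key": api_key, "api_secret": api_secret},
            files={"image_file1": image1, "image_file2": image2},
            timeout=30,
        )
    response.raise_for_status()
    return response.json()
```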

As in the previous cases, we can send two images with faces to be compared. However, the comparison uses only the biggest face found in each image.

“For image upload, the biggest face by the size of bounding box within the image will be used.”

Face++ Docs

What can go wrong:

- The application has to make sure on its own that there is only one face per image; otherwise the API will choose the largest one for the comparison,

- When integrating the API, developers might send the key and secret in the query string, which the service allows. As a result, the secret keys can be stored by load balancers or proxies between the application and the public service.
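The first check could be sketched as follows, assuming the JSON returned by the Face++ Compare API contains `faces1`/`faces2` (faces detected in each uploaded image), `confidence`, and a `thresholds` object with `1e-3`, `1e-4` and `1e-5` keys. The helper name and the default choice of the strictest `1e-5` threshold are our assumptions.

```python
from typing import Any, Dict


def facepp_is_match(result: Dict[str, Any], level: str = "1e-5") -> bool:
    """Accept only a one-face-per-image comparison whose confidence reaches the chosen threshold."""
    if len(result.get("faces1", [])) != 1 or len(result.get("faces2", [])) != 1:
        # Zero or several faces: the API would silently compare the biggest ones.
        return False
    if "confidence" not in result:  # no comparison was made, e.g. no face found
        return False
    return result["confidence"] >= result["thresholds"][level]
```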

How to fool face recognition with image

During the research, I was looking for logic flaws and inconsistencies in how uploaded images are handled. Keep in mind that I only manipulated input data, i.e. the images, without attacking the APIs or the infrastructure.

The main goal was to upload two images that at first glance don’t contain the face of the same person, while the service returns a high-confidence match. For each case, I propose possible solutions that protect you from the abuse described.

Hacking Face Comparison with Loopless GIF

| Azure Cognitive Services | Amazon Rekognition | Face++ |
|---|---|---|
| Vulnerable | N/A (doesn’t support GIFs) | N/A (doesn’t support GIFs) |

image1.gif

image2.png

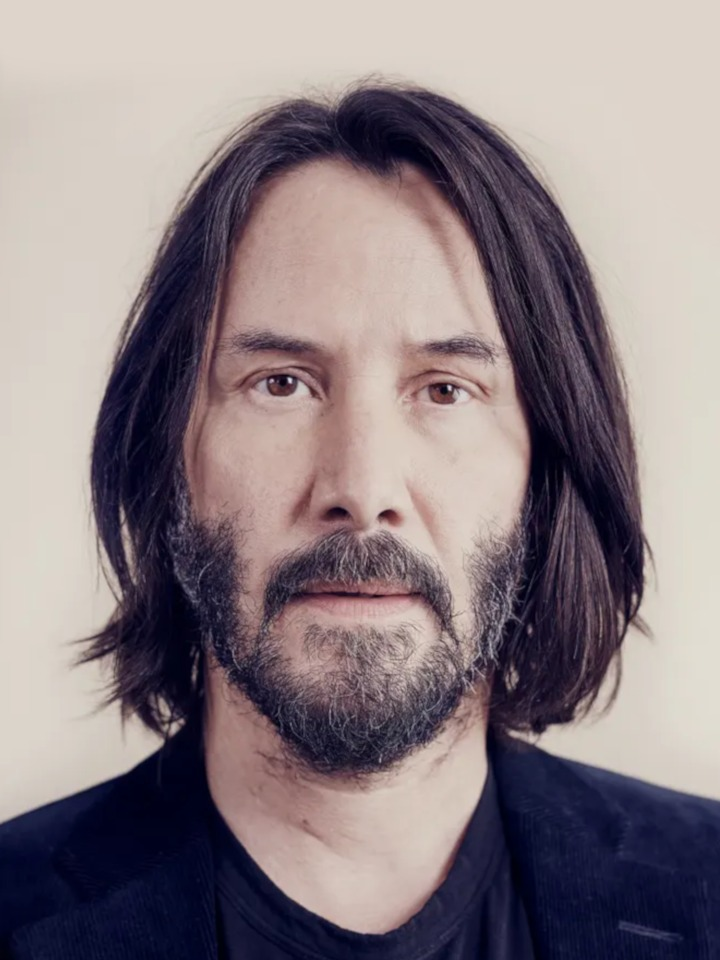

Comparing the above pictures results in a match with 98% confidence. But why did that happen, when both pictures clearly show different faces and I definitely don’t look like Keanu?

What if I told you that image1.gif actually contains exactly the same face of Keanu as image2.png? This is possible because the GIF format can contain multiple images, called frames!

The trick is to construct a GIF file with two frames: the first containing a picture of Keanu and the second containing me. The last step is to switch off the flag responsible for looping the frames indefinitely and to choose the lowest possible frame duration. As a result, the last frame stays on screen permanently, while Keanu’s face only flashes once while the page loads.

As you’ve probably already figured out, the service uses the first frame of the GIF for the comparison, as stated in its documentation.

“The supported input image formats are JPEG, PNG, GIF (the first frame), BMP.”

Azure Docs

Why do I consider this dangerous? It may pose a risk in applications that display and process the received GIF based on the visible image (most likely the last frame), while the service compares only the first one.

Unless you plan to compare every frame of the GIF, avoid this format and convert uploads into a static image before any further processing.
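To enforce that rule without trusting the file extension, the frames of a GIF can be counted by walking its block structure: header, logical screen descriptor, optional global colour table, then extension blocks and image descriptors until the 0x3B trailer. The stdlib-only sketch below is our own illustration of the GIF89a layout, not code from any of the providers; `reject_animated_gif` is a hypothetical upload check.

```python
def _skip_sub_blocks(data: bytes, pos: int) -> int:
    """Skip a chain of GIF data sub-blocks (length byte + payload) up to the 0 terminator."""
    while True:
        size = data[pos]
        pos += 1
        if size == 0:
            return pos
        pos += size


def gif_frame_count(data: bytes) -> int:
    """Count the image descriptors (frames) in a GIF file."""
    if data[:6] not in (b"GIF87a", b"GIF89a"):
        raise ValueError("not a GIF file")
    flags = data[10]
    pos = 13  # 6-byte header + 7-byte logical screen descriptor
    if flags & 0x80:  # global colour table present
        pos += 3 * 2 ** ((flags & 0x07) + 1)
    frames = 0
    while pos < len(data):
        introducer = data[pos]
        pos += 1
        if introducer == 0x3B:  # trailer: end of the file
            break
        if introducer == 0x2C:  # image descriptor: one frame
            frames += 1
            flags = data[pos + 8]  # after left, top, width, height (2 bytes each)
            pos += 9
            if flags & 0x80:  # local colour table present
                pos += 3 * 2 ** ((flags & 0x07) + 1)
            pos += 1  # LZW minimum code size
        elif introducer == 0x21:  # extension (graphic control, application, ...)
            pos += 1  # extension label
        else:
            raise ValueError(f"unexpected block 0x{introducer:02x} at offset {pos - 1}")
        pos = _skip_sub_blocks(data, pos)  # image data or extension payload
    return frames


def reject_animated_gif(data: bytes) -> None:
    """Hypothetical upload check: refuse GIFs with more than one frame."""
    if data[:6] in (b"GIF87a", b"GIF89a") and gif_frame_count(data) > 1:
        raise ValueError("animated GIF rejected: convert it to a single static image first")
```

A rejected upload could alternatively be converted to a single static image, as recommended above, before it reaches the comparison API.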

Attacking face comparison with Animated PNG

| Azure Cognitive Services | Amazon Rekognition | Face++ |
|---|---|---|
| Vulnerable | Vulnerable | Vulnerable |

image1.png

image2.png

Depending on the browser you are using to read this article, both images may show the same picture of Keanu’s face if the browser doesn’t support APNG. The technique is similar to the loopless GIF above: the first frame contains Keanu’s face, the second contains mine, and the number of loops is set to one. Exploiting this issue requires an application to display user images in an environment that supports Animated PNG, where viewers might be misled.

This case is not described in the documentation, possibly because vendors are not aware of APNG, which behaves like a normal PNG in software that does not support it. This works because APNG is designed as a fallback: software that does not understand the animation chunks simply displays the default PNG image.

To protect yourself from this, convert the received file into an image containing a single frame. This can be done by opening the APNG file with an image library, e.g. PIL (Python), and saving it again in the desired format.
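If you would rather reject animated PNGs than convert them, they can be recognised without decoding any pixels: per the APNG specification, an animated file carries an `acTL` (animation control) chunk before its first `IDAT` chunk, which non-supporting decoders simply skip. The stdlib sketch below walks the PNG chunk list to look for it; the function names are ours, and chunk CRCs are not validated.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_chunk_types(data: bytes):
    """Yield the 4-byte type of each chunk in a PNG file, in order (CRCs are not checked)."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        length, chunk_type = struct.unpack(">I4s", data[pos:pos + 8])
        yield chunk_type
        if chunk_type == b"IEND":
            return
        pos += 12 + length  # 4-byte length + 4-byte type + payload + 4-byte CRC


def is_apng(data: bytes) -> bool:
    """True if an acTL chunk appears before the first IDAT chunk."""
    for chunk_type in png_chunk_types(data):
        if chunk_type == b"acTL":
            return True
        if chunk_type == b"IDAT":
            return False
    return False
```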

Fooling Face Comparison with Transparent Layers in PNG

| Azure Cognitive Services | Amazon Rekognition | Face++ |
|---|---|---|
| Vulnerable | Vulnerable | Vulnerable |

Sending the images above to the comparison methods returns a match with 95% confidence. In this scenario, we smuggle Keanu’s face, which is bigger than the visible one, into a transparent layer. Because the hidden face has very low opacity and each service picks the largest face in the given images for the comparison, the services can be fooled.

It is still possible to notice the additional face by looking at the monitor from different angles or by placing a dark background colour behind the image.

When we used a white background instead of a transparent one, none of the tested APIs found the hidden face because it wasn’t visible enough. A black background, on the other hand, makes it visible to anyone looking at the image.

So what really happened here? Why do the APIs detect a face when it’s transparent?

At this point I can only guess, since I have no knowledge of the API backend implementation or of how the AI model receives its input. Most likely, the submitted images are converted to raw RGB images that are passed to the algorithm. The problem starts with RGBA, which PNG supports. The additional letter A stands for the alpha value, which defines the opacity level of each pixel.

There are a few ways to convert RGBA to RGB.

First, we can simply remove or ignore the alpha value, which results in the comparison below.

| Azure Cognitive Services | Amazon Rekognition | Face++ |
|---|---|---|
| 96% (detection_03) | 99% | 95% |

As you may have noticed, Keanu’s distorted face is fully visible, while the transparent pixels have been replaced with black. The face pixels still store their full colour values and only their alpha is low, so dropping the alpha channel makes them fully opaque. The background turns black because fully transparent RGBA pixels with the value (0, 0, 0, 0) are truncated to the RGB value (0, 0, 0), which is black.

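Another way is to convert RGBA to RGB with the common alpha-compositing formula, applied to each colour channel, where $\alpha$ is the pixel’s alpha value scaled to the range between 0 and 1:

$$C_{out} = (1 - \alpha) \cdot C_{background} + \alpha \cdot C_{pixel}$$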

When the background is fully transparent (black by default, with 0% opacity), the formula simplifies to the pixel’s opacity, in the range between 0 and 1, multiplied by its colour value. The example below was calculated with this formula using the default black background.

| Azure Cognitive Services | Amazon Rekognition | Face++ |
|---|---|---|
| 74% (detection_03) | 99% | 95% |

The example above is less visible than the previous one, and the external providers returned different similarity scores for Keanu because of its lower visibility. The Keanu image was embedded with 1% opacity.
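The two conversions can be compared on a single pixel. The sketch below is our own illustration: `drop_alpha` mirrors the first method, and `composite` implements the alpha-compositing formula with the alpha value scaled from 0–255 down to 0–1. The sample pixel is a hypothetical part of the hidden face stored at roughly 1% opacity (alpha 3 out of 255).

```python
from typing import Tuple

RGBA = Tuple[int, int, int, int]
RGB = Tuple[int, int, int]


def drop_alpha(pixel: RGBA) -> RGB:
    """Method 1: ignore the alpha channel and keep the stored colour."""
    r, g, b, _alpha = pixel
    return (r, g, b)


def composite(pixel: RGBA, background: RGB = (0, 0, 0)) -> RGB:
    """Method 2: out = (1 - a) * background + a * colour, with a = alpha / 255."""
    r, g, b, alpha = pixel
    a = alpha / 255
    bg_r, bg_g, bg_b = background
    return (
        round((1 - a) * bg_r + a * r),
        round((1 - a) * bg_g + a * g),
        round((1 - a) * bg_b + a * b),
    )


hidden_face_pixel = (200, 150, 120, 3)  # hypothetical pixel at ~1% opacity
print(drop_alpha(hidden_face_pixel))                   # (200, 150, 120): fully visible
print(composite(hidden_face_pixel))                    # (2, 2, 1): almost black
print(composite(hidden_face_pixel, (255, 255, 255)))   # (254, 254, 253): almost white
```

This is why the first method exposes the hidden face completely, while compositing leaves it only faintly present in the pixel values.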

The first countermeasure to this attack would be to reject images that contain more than one face. This would block our test case, but it leaves room for a bypass in which the visible face is hidden by distortions or covered so that it is no longer detected.

Another, more secure approach is to create an image without transparent layers, for example by applying an rgba2rgb conversion, which would allow another person to notice that there is a hidden face.
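A sketch of that flattening step for a whole image, given as a list of RGBA tuples (for example what an image library returns for an RGBA image). It composites every pixel onto an opaque background, white by default like scikit-image’s `skimage.color.rgba2rgb`, and also counts faintly visible pixels so that a suspicious upload can be routed to a human reviewer. The function name and the cut-off of 26 (about 10% opacity) are arbitrary assumptions.

```python
from typing import List, Sequence, Tuple

RGBA = Tuple[int, int, int, int]
RGB = Tuple[int, int, int]


def flatten_and_flag(pixels: Sequence[RGBA], background: RGB = (255, 255, 255),
                     faint_alpha: int = 26) -> Tuple[List[RGB], int]:
    """Composite RGBA pixels onto an opaque background and count faint pixels.

    A faint pixel is partially transparent (0 < alpha < faint_alpha), the kind of
    content used to smuggle a hidden face into an image.
    """
    bg_r, bg_g, bg_b = background
    flattened: List[RGB] = []
    faint = 0
    for r, g, b, alpha in pixels:
        if 0 < alpha < faint_alpha:
            faint += 1
        a = alpha / 255
        flattened.append((
            round((1 - a) * bg_r + a * r),
            round((1 - a) * bg_g + a * g),
            round((1 - a) * bg_b + a * b),
        ))
    return flattened, faint
```

Only the flattened pixels would then be sent to the external API, and the upload could be flagged for manual review whenever the faint-pixel count exceeds a small share of the image.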

SecuRing your face recognition implementations

Developing software with a security mindset is often hard. If you already use, or plan to use, external providers to process images with facial recognition, take a moment to go through the list below of changes that might lower the risk of abuse.

- If you perform any form of authentication based on the similarity of faces in two images, make sure there is only one visible face in each image,

- It is good practice to convert images to one format before storing or comparing them, instead of accepting every format the external provider supports,

- Avoid sending transparent images and GIFs – it’s better to keep control over the processed images than to risk the external service interpreting them differently,

- Add a quality check performed by humans to catch inconsistencies in comparisons that could lead to false positives,

- Protect your API keys by storing them in a safe place and using secure connections so they don’t leak.
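As an illustration of the format rule, the sketch below identifies an upload by its leading bytes (magic numbers) rather than by its file name or declared content type, and accepts only the single format your pipeline normalises to. Choosing JPEG is our assumption: standard JPEG has no alpha channel and no animation, which sidesteps the GIF, APNG and transparency tricks described above. The names are hypothetical, and the conversion itself would be done beforehand with an image library.

```python
from typing import FrozenSet, Optional

# Signature bytes at the start of each file format.
MAGIC_NUMBERS = {
    "jpeg": (b"\xff\xd8\xff",),
    "png": (b"\x89PNG\r\n\x1a\n",),
    "gif": (b"GIF87a", b"GIF89a"),
    "bmp": (b"BM",),
}


def sniff_format(data: bytes) -> Optional[str]:
    """Identify the image format from its signature bytes; None if unknown."""
    for name, signatures in MAGIC_NUMBERS.items():
        if any(data.startswith(signature) for signature in signatures):
            return name
    return None


def require_format(data: bytes, allowed: FrozenSet[str] = frozenset({"jpeg"})) -> str:
    """Raise unless the bytes really are one of the allowed formats."""
    detected = sniff_format(data)
    if detected not in allowed:
        raise ValueError(f"unsupported image format: {detected}")
    return detected
```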

Summary

Facial recognition is a powerful tool that converts any face into a digital set of information, which AI then processes for anything from similarity comparison to detecting ethnicity and emotions. It is important to keep it secure, and when using external providers it’s better to add extra security layers on our end.

Also, systems that rely entirely on automatic solutions may fall for various tricks used by attackers. The real consequences depend on the application and may vary.

One of the most common goals is to bypass authentication while keeping the attacker’s own face visible in the picture. Financial institutions commonly add a human verification step that confirms the automatic results, but this reviewer could be tricked as well.

It is always worth approaching the implementation of new solutions with a certain degree of caution. With my research, I wanted to show that even “popular” APIs can turn out to be vulnerable, which in turn can have serious consequences for your organization. If you have any questions about this article, feel free to contact us via the contact form.